Composable Intelligence: What It Takes to Achieve (and Sustain) Mission-Aligned AI

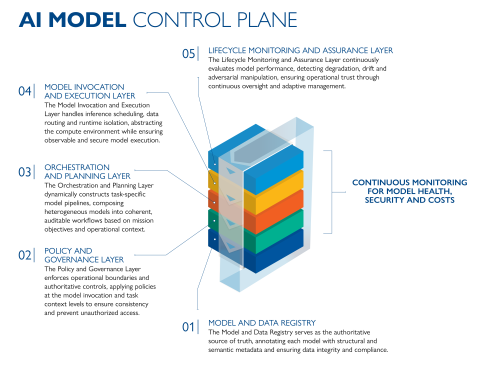

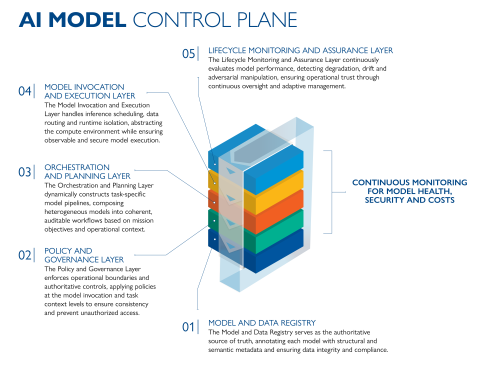

An AI Model Control Plane orchestrates, safeguards and enables composable AI, managing the full model lifecycle through six principles that ensure resilience and interoperability.

The first phase of the AI revolution was about building smarter models. The second phase—the one that will determine long-term success—is about building smarter systems. And while there’s no shortage of players chasing the next breakthrough algorithm, the most strategic are focused on solving the real problem: How do you reliably deploy, govern and orchestrate AI at mission scale?

SAIC has identified six principles for lasting success. Here’s what they are—and how we’re operationalizing them.

Principle 1: Elevate AI to infrastructure status.

We believe the future belongs not to those with the most powerful models, but to those with the most resilient infrastructure for AI lifecycle management and policy enforcement. Only by elevating AI to the status of infrastructure is it possible to achieve the level of mission alignment, resilience and scale required for defense and intelligence operations.

Such infrastructure must include a control plane that serves as the operating system for AI models (see Figure 1). It also requires a Composable Intelligence architecture that treats AI models as managed services within a governed ecosystem—not isolated black boxes hoping to work in production. The control plane plays a critical role in managing the full lifecycle of AI models—from integrating them into existing systems to orchestrating “model of models” and other ensemble model approaches to ensuring that models are hardened for adversarial protection.

With this approach, there’s no need to bet your mission on a single AI solution. Instead, you gain access to an entire ecosystem of models that work together, adapt dynamically and maintain performance under pressure.

Principle 2: Make it modular.

In SAIC’s Composable Intelligence architecture, AI models function like LEGO bricks—individually useful but transformative when combined. Unlike proprietary systems that lock you into single-vendor solutions, this approach makes every AI capability composable and interoperable. Whether it’s computer vision from Vendor A, natural language processing from Vendor B or symbolic reasoning from your internal team, SAIC’s AI Model Control Plane orchestrates them into unified workflows. Models become plug-and-play components in larger decision-making systems. Consequently, you’re never locked into a single AI vendor or approach. As new capabilities emerge, integrate them seamlessly. As old models grow obsolete, swap them out without system-wide disruption.
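As a rough illustration of the plug-and-play idea, the sketch below treats each model as a swappable component behind a named capability slot. Everything in it (the `Workflow` class, the `plug` and `run` methods, and the vendor functions) is a hypothetical stand-in invented for this example, not SAIC's actual interface.

```python
from typing import Callable, Dict

# Assumption for illustration: a "model" is any callable that takes a dict
# payload and returns a dict of new fields.
Model = Callable[[dict], dict]


class Workflow:
    """An ordered set of capability slots, each filled by a swappable model."""

    def __init__(self) -> None:
        self._slots: Dict[str, Model] = {}

    def plug(self, capability: str, model: Model) -> None:
        # Plugging into an existing slot replaces the old model in place;
        # the slot keeps its position in the workflow.
        self._slots[capability] = model

    def run(self, payload: dict) -> dict:
        # Each model sees the accumulated payload and contributes its fields.
        for model in self._slots.values():
            payload = {**payload, **model(payload)}
        return payload


# Hypothetical models from different sources.
def vendor_a_vision(payload: dict) -> dict:
    return {"objects": ["vehicle"]}


def in_house_vision(payload: dict) -> dict:
    return {"objects": ["vehicle", "antenna"]}


def vendor_b_nlp(payload: dict) -> dict:
    return {"summary": f"{len(payload['objects'])} object(s) detected"}


workflow = Workflow()
workflow.plug("vision", vendor_a_vision)
workflow.plug("language", vendor_b_nlp)
first = workflow.run({"image_id": "img-001"})

# Swap the vision model without touching the rest of the workflow.
workflow.plug("vision", in_house_vision)
second = workflow.run({"image_id": "img-001"})
```

The language model never changes here; only the vision slot is swapped, which is the property the modular approach is after.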

Principle 3: You can’t bypass governance.

Most AI systems treat security and compliance as checkbox exercises that get bolted on after deployment. It’s an approach that fails catastrophically in high-stakes environments in which people’s lives—and the nation’s security—are on the line.

The opposite is true at SAIC, where we architect governance into the DNA of every AI interaction. Our AI Model Control Plane serves as a central construct for registering, governing, orchestrating, monitoring and executing models. It makes it impossible to bypass security protocols, access unauthorized models or violate operational policies.

When every model invocation is authenticated, audited and aligned with mission requirements, you get AI you can trust, audit and defend to leadership. So, when congressional committees inquire about your AI decisions, you’ll have answers.
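One way to picture governance that cannot be bypassed is a single invocation path that checks authorization and writes an audit record before any model runs. The following is a minimal sketch under that assumption; the `ControlPlane` class, its methods and the sample users are hypothetical and do not describe SAIC's implementation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Dict, List, Set


@dataclass
class ControlPlane:
    """Hypothetical gatekeeper: models are reachable only through invoke()."""

    models: Dict[str, Callable[[str], str]] = field(default_factory=dict)
    grants: Dict[str, Set[str]] = field(default_factory=dict)  # user -> model names
    audit_log: List[dict] = field(default_factory=list)

    def register(self, name: str, model: Callable[[str], str]) -> None:
        self.models[name] = model

    def grant(self, user: str, name: str) -> None:
        self.grants.setdefault(user, set()).add(name)

    def invoke(self, user: str, name: str, prompt: str) -> str:
        allowed = name in self.models and name in self.grants.get(user, set())
        # Every attempt is recorded, whether or not it is allowed.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "model": name,
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"{user} may not invoke {name}")
        return self.models[name](prompt)


plane = ControlPlane()
plane.register("summarizer", lambda text: text[:20])
plane.grant("analyst1", "summarizer")

result = plane.invoke("analyst1", "summarizer", "Convoy observed moving north at dawn")

try:
    plane.invoke("intruder", "summarizer", "anything")
    denied = False
except PermissionError:
    denied = True
```

Because denied attempts are logged alongside successful ones, the audit trail can answer both "what did the system do?" and "what was it prevented from doing?"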

A Foundation for AI FinOps

Organizations will face increasingly complex costs as AI workloads diversify across training, fine-tuning, inference, agentic orchestration and on-demand “as a service” model calls (e.g., token-based usage of foundation models). As part of AI lifecycle management, a control plane enables a new frontier of financial transparency and cost optimization: AI FinOps. Through its auditable execution layer and policy-governed invocation, the control plane allows fine-grained logging of resource utilization by model, user, task and mission. That enables cost attribution, budgeting and throttling at the model level, transforming abstract concerns about “model sprawl” and escalating cloud expenses into actionable insights for CIOs, CFOs and mission leaders seeking cost-performance optimization across their AI investments.
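To make cost attribution and model-level throttling concrete, here is a small sketch that rolls up logged usage by any dimension and flags models that exceed a budget. The record fields, the per-token prices and the budget figures are all invented for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Usage:
    """One logged invocation (hypothetical schema)."""
    model: str
    user: str
    mission: str
    tokens: int


# Assumed prices in dollars per 1,000 tokens; not real rates.
PRICE_PER_1K_TOKENS = {"summarizer": 0.50, "detector": 2.00}


def cost(record: Usage) -> float:
    return record.tokens / 1000 * PRICE_PER_1K_TOKENS[record.model]


def attribute(records: List[Usage], key: str) -> Dict[str, float]:
    """Total cost grouped by one dimension: 'model', 'user' or 'mission'."""
    totals: Dict[str, float] = defaultdict(float)
    for record in records:
        totals[getattr(record, key)] += cost(record)
    return dict(totals)


def over_budget(records: List[Usage], budgets: Dict[str, float]) -> List[str]:
    """Models whose spend exceeds their budget and could be throttled."""
    spent = attribute(records, "model")
    return [m for m, limit in budgets.items() if spent.get(m, 0.0) > limit]


records = [
    Usage("summarizer", "analyst1", "alpha", 4000),
    Usage("detector", "analyst2", "alpha", 1000),
    Usage("detector", "analyst1", "bravo", 3000),
]

by_mission = attribute(records, "mission")
by_model = attribute(records, "model")
to_throttle = over_budget(records, {"summarizer": 5.0, "detector": 5.0})
```

The same log supports every view: a CFO can group by mission, while an operations lead can group by model to decide where throttling applies.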

Principle 4: Build for the fight.

In Silicon Valley, engineers design AI for consumer apps and enterprise productivity. At SAIC, we design AI for contested and multi-domain environments where adversaries are actively trying to break your systems. It’s a fundamental difference in mindset and engineering approach: Every component of our AI infrastructure assumes adversarial conditions. We embed detection mechanisms for model poisoning, input manipulation and confidence attacks. And our systems don’t just perform under ideal conditions. They also maintain effectiveness when under assault. These capabilities are critical to maintaining decision superiority even when the enemy targets your cognitive infrastructure.

Principle 5: Make trust tangible.

When deploying and using AI, trust must be a measurable property that can be quantified, monitored and enforced through technology. SAIC’s systems don’t require blind trust of AI. Rather, they give you the tools to verify that trust is warranted. For example, every AI output comes with explainable provenance: which models were used, why they were selected, what data informed the decision and how confident the system is in its recommendations.

In addition, trust scores are continuously updated based on real-world performance, not laboratory benchmarks. As a result, your operators know exactly what they’re looking at and why. They can make informed decisions about when to rely on AI recommendations vs. when to override them. In this scenario, human-machine teaming becomes a genuine partnership.
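The article does not say how trust scores are computed. As one plausible sketch, the example below uses an exponential moving average of observed outcomes (a technique chosen here for illustration, not SAIC's stated method) and attaches the score to a provenance record. The class, function and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TrustScore:
    """Running trust estimate in [0, 1], updated from field outcomes."""
    value: float = 0.5   # assumed neutral starting point
    alpha: float = 0.2   # assumed weight given to the newest observation

    def update(self, correct: bool) -> float:
        outcome = 1.0 if correct else 0.0
        # Exponential moving average: recent performance counts most.
        self.value = (1 - self.alpha) * self.value + self.alpha * outcome
        return self.value


def with_provenance(output: str, model: str, reason: str, trust: TrustScore) -> dict:
    """Bundle an output with the metadata an operator needs to judge it."""
    return {
        "output": output,
        "model": model,
        "selected_because": reason,
        "trust_score": round(trust.value, 3),
    }


trust = TrustScore()
trust.update(True)    # operator confirmed the recommendation
trust.update(True)
trust.update(False)   # operator overrode the recommendation

record = with_provenance(
    output="possible vehicle staging area",
    model="detector-v2",
    reason="highest trust score for imagery tasks",
    trust=trust,
)
```

A score like this moves with operational feedback rather than a one-time benchmark, so an operator deciding whether to override a recommendation sees how the model has performed recently.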

Principle 6: Aim for flexibility without chaos.

The operational tempo of modern missions demands AI systems that can adapt on the fly. Yet adaptation without governance leads to chaos. SAIC has solved the paradox of simultaneous flexibility and control. Our semantic flexibility architecture lets you reconfigure AI workflows in real time without reengineering underlying models. Need to shift from threat detection to damage assessment? The system dynamically recomposes available models to match your evolving mission needs, all within policy guardrails. In other words, you respond to changing conditions at machine speed while maintaining human oversight, and your AI infrastructure becomes as agile as your mission requirements without sacrificing reliability or accountability.
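A simplified way to think about recomposition within guardrails: each mission maps to a list of required capabilities, and the system fills each one only from models that policy has approved. The catalog entries, mission plans and `approved` flag below are assumptions made up for this sketch.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class ModelEntry:
    name: str
    capability: str
    approved: bool  # hypothetical policy flag set by governance


# Assumed mapping from mission type to the capabilities it needs, in order.
MISSION_PLANS: Dict[str, List[str]] = {
    "threat_detection": ["imagery_analysis", "threat_classification"],
    "damage_assessment": ["imagery_analysis", "change_detection"],
}


def compose(mission: str, catalog: List[ModelEntry]) -> List[str]:
    """Pick one approved model per required capability, or fail loudly."""
    plan = []
    for capability in MISSION_PLANS[mission]:
        candidates = [
            m for m in catalog if m.capability == capability and m.approved
        ]
        if not candidates:
            # The guardrail: never fall back to an unapproved model.
            raise LookupError(f"no approved model for {capability!r}")
        plan.append(candidates[0].name)
    return plan


catalog = [
    ModelEntry("sat-vision", "imagery_analysis", approved=True),
    ModelEntry("threat-net", "threat_classification", approved=True),
    ModelEntry("delta-map-beta", "change_detection", approved=False),
    ModelEntry("delta-map", "change_detection", approved=True),
]

detection_plan = compose("threat_detection", catalog)
assessment_plan = compose("damage_assessment", catalog)
```

Switching missions reuses `sat-vision` and changes only the second stage, while the unapproved `delta-map-beta` is never selected even though it appears first in the catalog.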

How to get started

To thrive in the AI era, you don’t need the fanciest models—you need the most reliable AI infrastructure. Here’s how to start positioning your organization for long-term mission success:

Three immediate steps

Three strategic investments

Three crucial cultural shifts

Remember: The value of AI lies in amplifying human intelligence through governed, composable and resilient cognitive infrastructure. While many others are still trying to perfect the algorithm, SAIC is perfecting the system that makes algorithms useful, trustworthy and mission ready. Reach out to start building your AI infrastructure—and AI advantage—with SAIC.