LLMs Alone Aren’t Fit for Military and Intelligence Operations

Four reasons to leverage Retrieval-Augmented Generation with Reasoning

With the ability to generate coherent, articulate responses, large language models (LLMs) have sparked genuine excitement in both commercial and government spaces. But here’s an uncomfortable reality: LLMs weren’t designed for military and intelligence missions. When examined through the lens of operational settings—where consequences are real and timelines are compressed—their limitations become readily apparent.

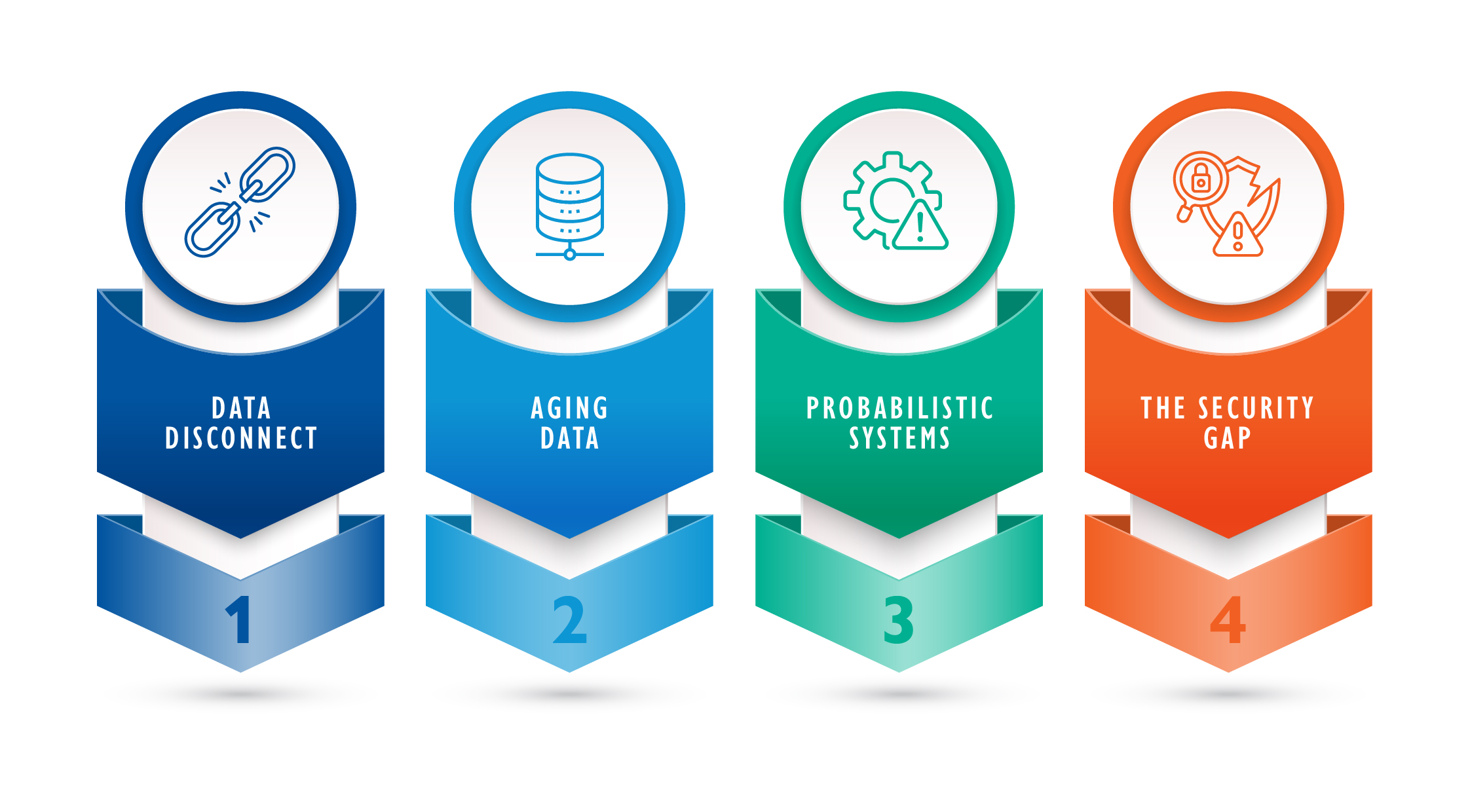

Reason #1: Data disconnect

The most fundamental issue is that these models aren’t connected to the right data. Foundation LLMs are trained on massive amounts of text scraped from public sources. This training teaches them the structure of human language and lets them mimic reasoning patterns. It does not give them the knowledge a commander, analyst or operator actually needs in a battlespace.

For example, LLMs don’t know what was in yesterday’s Intelligence, Surveillance and Reconnaissance (ISR) feed. They don’t understand the nuances of an operations order (OPORD) that just got signed. Nor do they have access to raw intelligence, mission logs, platform specs or red team assessments. Why does this disconnect from mission-specific, enclave-bound data matter? Because when an LLM answers a question, it doesn’t look anything up in a database of verified facts. It generates a response based on the statistical likelihood of word sequences learned during training.

The result may sound articulate, but it may be completely wrong. Either way, it will sound confident—often more so than a human would in the same situation. This phenomenon is known as hallucination, and it occurs when the model outputs something that isn’t grounded in fact but presents it as if it were. In a commercial setting, a hallucinated answer might lead someone to double-check; in a mission setting, it could lead to operational delay or failure.

Reason #2: Aging data


Another limitation of LLMs is that they age poorly. The knowledge they contain was “frozen” when they were trained, often months or years before deployment. So while a human analyst reads the morning brief or an operations officer adjusts plans based on last night’s intelligence reports, a base LLM has no awareness of change. The adversary’s tactics, techniques and procedures (TTPs) may evolve, rules of engagement (ROEs) may shift or theater-level guidance may be updated—but the model can’t incorporate any of this into its outputs. Without a method to inject fresh information in real time, the model’s data becomes stale and its output drifts.

Even more concerning, LLMs aren’t trained to understand the context of military problems; the concepts essential to military operations are severely underrepresented in their training data. They don’t recognize doctrinal constructs, and they don’t know the difference between fires and effects or between order of battle and scheme of maneuver. They aren’t built to reason through a kill chain, an acquisition lifecycle or a complex multi-domain targeting cycle. What they offer in general fluency, they lack in mission specificity.

Reason #3: Probabilistic systems

This is the foundational mismatch: LLMs are built and trained as probabilistic, not deterministic, systems. In a deterministic system, the same input yields the same output, a critical property in the flow of military operations. Non-deterministic systems might be tolerable in consumer chatbots. Yet in environments where traceability, auditability and consistency are required, non-deterministic systems quickly become a liability.

A commander doesn’t want five different answers to the same question, each based on a different statistical guess. A commander needs a single, accurate and insightful answer.
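To make the distinction concrete, here is a toy sketch of how a language model picks its next word. The four-word vocabulary and its scores are hypothetical; a real model scores tens of thousands of tokens at every step. Sampling in proportion to probability, which is common in chat interfaces, can return different answers to the same prompt, while always taking the top-scoring word (greedy decoding) repeats itself. Note that greedy decoding only makes the choice repeatable: it still picks the most likely word, not a verified one.

```python
import math
import random

# Hypothetical next-token scores (logits) for a toy four-word vocabulary.
LOGITS = {"north": 2.0, "south": 1.6, "east": 0.9, "west": 0.5}


def softmax(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Convert raw scores into a probability distribution."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_token(logits: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Probabilistic decoding: draw a token in proportion to its probability."""
    probs = softmax(logits, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


def greedy_token(logits: dict[str, float]) -> str:
    """Deterministic decoding: always pick the highest-scoring token."""
    return max(logits, key=logits.get)


if __name__ == "__main__":
    rng = random.Random()  # unseeded, like a typical chat session
    sampled = [sample_token(LOGITS, 1.0, rng) for _ in range(5)]
    greedy = [greedy_token(LOGITS) for _ in range(5)]
    print("sampled:", sampled)  # may differ from run to run
    print("greedy: ", greedy)   # the same token every time
```

Lowering the temperature sharpens the distribution toward the top-scoring word, which reduces variation but does nothing to ground the answer in mission data.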

Reason #4: The security gap

Finally, most commercial LLMs weren’t designed to run on air-gapped networks. They require cloud infrastructure or external APIs. They lack native access control, classification enforcement and zero-trust security features. They’re not built with the assumption that data might be classified, compartmentalized or releasable only under specific caveats.

Why ‘build your own’ and ‘fine-tune’ fall short

As commanders and senior leaders evaluate how to responsibly adopt generative AI, it’s natural to ask: If frontier LLMs alone aren’t good enough, why not build a better one ourselves? Or fine-tune an existing commercial model to our needs?

On the surface, either approach appears to offer a workaround. But dig into operational realities—classified environments, high op tempo, evolving threats and limited resources—and it becomes clear that both approaches are untenable.

Training a large-scale LLM from scratch is technically feasible, but the costs in time, money and manpower are staggering. A custom model with even mid-tier capabilities requires tens of millions of dollars in compute resources, specialized engineering teams and a 12-to-18-month development effort. And that’s before factoring in the burden of security accreditation, data curation and sustainment.

Further, a model built from scratch would still suffer from the same failures that make general-purpose LLMs problematic in the first place. It would hallucinate when uncertain, grow stale unless constantly updated, and lack access to the ever-evolving mission data needed to ground its reasoning in reality.

Fine-tuning a commercial or open-source model would seem like an efficient alternative, and in some narrow use cases it could be. But this approach introduces operational instability that most cells can’t afford. Fine-tuned models are inherently brittle and unable to account for ever-changing conditions and contexts. They may improve performance on a particular task, but that performance often depends on conditions staying consistent with the data the model was tuned on. The moment a new adversary behavior emerges or the mission shifts to a new domain, the model’s knowledge lags and outputs become less trustworthy.

Retraining and revalidating the model to reflect the new reality can take weeks or months. By then, the operational window may have closed. Fine-tuning also leads to fragmentation. Each mission set may require its own version of the model tuned to its particular needs. What was once intended as a shared capability becomes a patchwork of inconsistent systems—a compatibility and configuration management nightmare.

What’s a better approach for military and intelligence missions?

What’s needed is not a new model, but a new method. It needs to let you plug generative reasoning into the data you already trust, and it must be designed to incorporate updates as fast as the mission changes. It’s like asking an officer to write a course of action (COA) brief. You wouldn’t expect them to do it from memory. Instead, you would give them the current concept of operations (CONOP), threat report, ROEs and logistics tables. Then they would reason through the source material to formulate their recommendations.

Now imagine a system where the AI does the same—combining the reasoning power of language models with real-time access to authoritative, mission-specific data. That’s Retrieval-Augmented Generation with Reasoning (RAG-R). It solves the problems that make LLMs insufficient on their own, turning them into genuine decision aids that can keep pace with the operational tempo.
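The basic retrieve-then-generate pattern can be sketched in a few lines. This is a minimal, generic illustration of retrieval-augmented generation under stated assumptions, not the RAG-R design this series describes: the corpus entries are hypothetical placeholders, naive keyword overlap stands in for a real search index or embedding model, and the call to the language model itself is omitted. What it shows is the mechanism: like the officer handed the CONOP and threat report, the model is given current, attributable source passages and told to answer only from them.

```python
import re
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str  # hypothetical source identifier
    text: str


# Hypothetical placeholder corpus standing in for trusted mission data.
CORPUS = [
    Document("threat-report-0412", "Placeholder threat report: route B closed due to bridge damage."),
    Document("logistics-table-07", "Placeholder logistics table: fuel resupply moved to site C."),
    Document("roe-update-03", "Placeholder ROE update: no changes to current guidance."),
]


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a stand-in for a real search index or embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, corpus: list[Document], k: int = 2) -> list[Document]:
    """Rank documents by keyword overlap with the question and keep the top k."""
    q = tokenize(question)
    scored = [(len(q & tokenize(d.text)), d) for d in corpus]
    scored = [pair for pair in scored if pair[0] > 0]  # drop unrelated documents
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_prompt(question: str, passages: list[Document]) -> str:
    """Assemble a prompt that asks the model to answer only from cited sources."""
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in passages)
    return (
        "Answer using only the sources below and cite their IDs. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    question = "Is route B open?"
    passages = retrieve(question, CORPUS)
    print(build_prompt(question, passages))
    # The prompt would then be sent to a language model; that call is omitted.
```

Because the sources travel with the prompt, updating the corpus changes the answers without retraining the model, and each answer can be traced back to the document IDs it cites.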

The second post in this series will dive into how RAG-R works, why it’s a game-changer for military and intelligence operations, and how making a peanut butter and jelly sandwich relates to building trustworthy AI systems for the battlespace.